How Safe Are Autonomous Cars in Real Traffic?

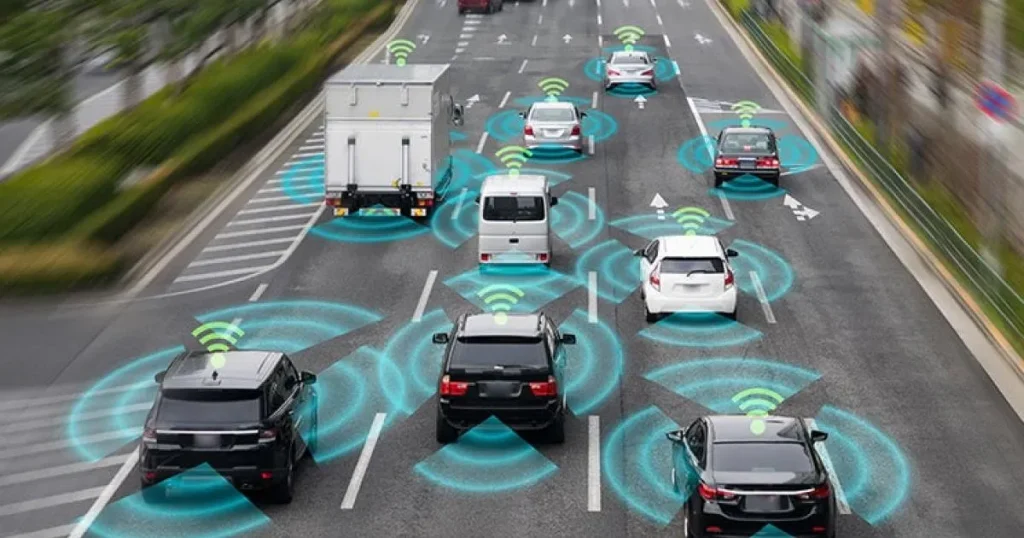

New research on autonomous cars examines how they respond in crash scenarios drawn from real-world traffic, raising questions about safety and decision-making.

As autonomous cars edge closer to mainstream adoption, their real-world safety performance remains a critical question. While simulations and controlled tests have shown promising results, translating these findings into unpredictable urban environments is far more complex. A study published in Accident Analysis & Prevention explores how these vehicles behave in actual crash scenarios, and whether they make safer decisions than human drivers.

The study simulated various crash conditions using realistic traffic data and modeled how different autonomous driving systems, some using AI-based decision logic, responded to collision risks. The results showed that while autonomous cars often outperformed average human drivers in avoiding crashes, their behavior was not flawless, and they sometimes weighed safety trade-offs differently than human drivers might.

Decision-Making in High-Stress Scenarios

One key insight is that autonomous cars rely heavily on pre-programmed rules or machine learning models trained on historical data. This can limit their flexibility when unexpected or ethically challenging situations arise, such as choosing between two risky outcomes. The vehicles’ decision trees often reduce such dilemmas to risk-minimization problems, which may not always align with human moral instincts.
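To make the risk-minimization framing concrete, here is a minimal Python sketch. It is an illustration only, not the study's model or any manufacturer's logic: the `Maneuver` type, the `expected_risk` formula (collision probability times severity), and every number below are assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A candidate action with hypothetical, made-up risk estimates."""
    name: str
    collision_probability: float  # estimated chance of a collision, 0.0 to 1.0
    severity: float               # estimated harm if a collision occurs (arbitrary units)


def expected_risk(maneuver: Maneuver) -> float:
    # Assumed scoring rule: probability of a crash times how bad it would be.
    return maneuver.collision_probability * maneuver.severity


def choose_maneuver(options: list[Maneuver]) -> Maneuver:
    # Pure risk minimization: pick the option with the lowest expected risk.
    if not options:
        raise ValueError("at least one maneuver is required")
    return min(options, key=expected_risk)


# Illustrative values only; they do not come from the study.
candidates = [
    Maneuver("brake_in_lane", collision_probability=0.30, severity=4.0),
    Maneuver("swerve_left", collision_probability=0.10, severity=9.0),
    Maneuver("swerve_right", collision_probability=0.20, severity=6.0),
]

best = choose_maneuver(candidates)
print(best.name)
```

With these invented numbers the rule selects `swerve_left`, the option with the worst possible outcome but the lowest chance of it happening. A human driver might instinctively avoid that choice, which is the kind of mismatch the paragraph above describes.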

The research also found that some autonomous cars failed to execute proper evasive maneuvers in rare or complex crash setups. While most systems recognized and responded to typical obstacles, edge cases such as cross-path intrusions or multi-vehicle chain reactions produced inconsistent results.

Are Autonomous Vehicles Truly Safer?

Despite these limitations, the study found that autonomous cars generally reduce the likelihood of crashes caused by human error. These systems maintain safe following distances, monitor blind spots more accurately, and never suffer from distraction or fatigue. Yet their inability to reason like a human means their reactions may not always match public expectations, especially when moral judgment is involved.
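As a rough illustration of what "maintaining a safe following distance" can mean in practice, the sketch below checks the time gap to the vehicle ahead. The function names are hypothetical, and the 2-second threshold is a common driver-education rule of thumb assumed here for the example. Neither comes from the study.

```python
def time_headway_s(gap_m: float, speed_mps: float) -> float:
    """Seconds until the car reaches the lead vehicle's current position."""
    if speed_mps <= 0:
        # A stationary car never closes the gap.
        return float("inf")
    return gap_m / speed_mps


def is_following_safely(gap_m: float, speed_mps: float,
                        min_headway_s: float = 2.0) -> bool:
    # min_headway_s = 2.0 is an assumed rule-of-thumb threshold, not a standard.
    return time_headway_s(gap_m, speed_mps) >= min_headway_s


speed = 25.0  # metres per second, roughly 90 km/h
print(is_following_safely(gap_m=40.0, speed_mps=speed))  # 1.6 s gap
print(is_following_safely(gap_m=60.0, speed_mps=speed))  # 2.4 s gap
```

At 25 m/s, a 40-metre gap is only 1.6 seconds of headway and fails the check, while a 60-metre gap gives 2.4 seconds and passes. A production system would also account for braking performance, road surface, and sensor uncertainty, all of which this sketch ignores.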

Moreover, current autonomous technologies are still being refined for variable road conditions, such as poor weather or unpredictable pedestrian behavior. While developers continue to improve sensor fusion and AI logic, full self-driving capability under all conditions remains a work in progress. This suggests autonomous cars are safest when paired with human oversight in the near term.

The Path Toward Safer Integration

For autonomous cars to coexist safely with human-driven vehicles, the industry needs unified testing standards that simulate diverse real-world conditions. Regulators and manufacturers must collaborate on crash-avoidance benchmarks, ethical decision protocols, and transparent safety reporting. Such collaboration helps build public trust and ensures vehicles are tested not only in labs but also against complex, messy traffic realities.

In the long run, combining artificial intelligence with ethical reasoning frameworks may help close the gap between machine risk calculations and human moral expectations. Future systems could incorporate context-aware risk models, learning not only from collisions but also from near-misses and human driving patterns. Until then, autonomous cars represent a promising but still-evolving solution to modern traffic safety challenges.